From Photo to Footprint: Democratizing Sustainable Eating with AI-powered UX

Yummy Emissions is an innovative project developed in partnership with the Digital Humanity Lab, Bern, Switzerland. The project leverages user-centered design and artificial intelligence (AI) to create a unique user experience that allows individuals to calculate the carbon footprint of their meals. By providing quantitative data in an engaging manner, Yummy Emissions aims to encourage sustainable food choices and promote better dietary practices.

This project highlights the power of AI for user-centered design. By leveraging image recognition, Yummy Emissions removes the barrier of manual data entry, making dietary footprint tracking effortless. This paves the way for innovative applications of AI that empower users to make positive choices through delightful experiences.

Talayeh Dehghani

The project was carried out during my PhD program in collaboration with the Digital Humanity Lab in Bern, Switzerland, an organization committed to integrating technology with human-centered design to address contemporary global challenges. The lab provided the resources and support needed to develop a system aligned with its mission of promoting sustainability through innovative technological solutions.

Climate change mitigation often involves complex strategies, but one accessible lever is dietary change. The challenge lies in making carbon calculations straightforward and engaging for everyday users. Traditional carbon footprint calculators are cumbersome and not user-friendly, typically relying on manual data entry. This project addresses the need for a fast, user-centric system that estimates the carbon emissions of individual meals, thereby encouraging users to make more sustainable food choices.

Role

2 UX designers

1 Design Lead

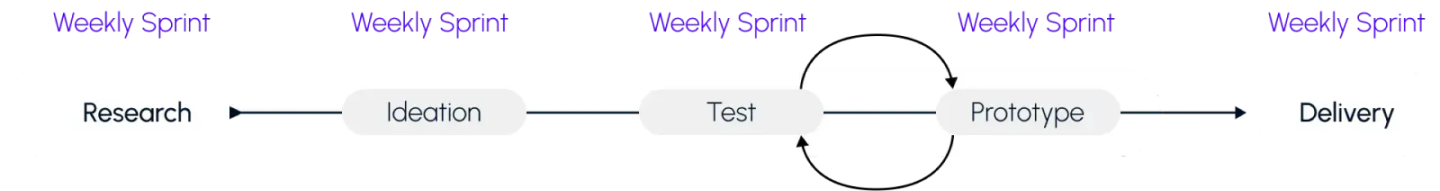

Throughout this project, we employed a data-driven process so that our design decisions were informed by user behavior and preferences. This approach strengthens the user experience and also allows continuous improvement based on real-world data collected after the app's launch.

We created personas representing our target audience to understand their needs, interests, and pain points regarding meal tracking and sustainable eating.

We explored the core drives behind user engagement in games and identified three to target: Development and Accomplishment, Curiosity and Unpredictability, and Ownership and Possession.

To better understand the landscape of existing solutions, we conducted a detailed competitive analysis. This involved examining current applications and tools that help users track their dietary carbon footprints. We assessed various factors such as usability, accuracy, user engagement, and visual design. By identifying strengths and weaknesses in existing solutions, we pinpointed opportunities for Yummy Emissions to innovate and offer unique value.

We also organized focus groups to gather in-depth insights and validate our concepts with potential users. Participants were selected based on diverse demographics and dietary habits to ensure a wide range of perspectives. During these sessions, we presented our initial ideas and prototypes, encouraging open discussions about user preferences, challenges, and expectations.

After the app identifies the ingredients in a meal photo and estimates their portions, the AI system retrieves data on the carbon emissions associated with the production, processing, and transportation of each ingredient. These data points are compiled from reputable environmental databases and scientific research. The app then calculates the total carbon footprint of the meal by summing the emissions of all identified ingredients, each adjusted for its portion size.
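The summation described above can be sketched in a few lines of Python. This is a minimal illustration, assuming emission factors expressed in kg CO2e per kilogram of ingredient and portion sizes estimated in grams. The ingredient names, factor values, the `DetectedIngredient` class, and the `meal_footprint_kg` function are hypothetical placeholders, not the app's actual data or code.

```python
from dataclasses import dataclass

# Hypothetical emission factors in kg CO2e per kg of ingredient.
# Placeholder values for illustration only; a real app would load
# them from an environmental database.
EMISSION_FACTORS = {
    "rice": 4.0,
    "chicken": 10.0,
    "broccoli": 2.0,
}


@dataclass
class DetectedIngredient:
    """An ingredient recognized in a meal photo (hypothetical structure)."""
    name: str
    portion_g: float  # estimated portion size in grams


def meal_footprint_kg(ingredients, factors):
    """Return the meal's footprint in kg CO2e: the sum of each
    ingredient's emission factor scaled by its portion size."""
    total = 0.0
    for item in ingredients:
        factor = factors[item.name]  # raises KeyError for unknown ingredients
        total += factor * item.portion_g / 1000.0  # convert grams to kilograms
    return total


meal = [
    DetectedIngredient("rice", 150),
    DetectedIngredient("chicken", 120),
    DetectedIngredient("broccoli", 80),
]
print(f"{meal_footprint_kg(meal, EMISSION_FACTORS):.2f} kg CO2e")  # prints "1.96 kg CO2e"
```

Keeping the factor table separate from the calculation means the numbers can be swapped for values from a real database without touching the summation logic.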

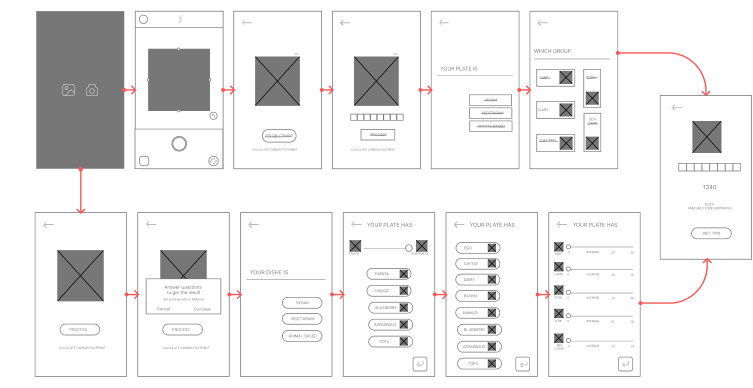

We used AI image recognition to streamline tracking the carbon footprint of users' meals. This approach involved several components and steps designed to keep carbon footprint estimates accurate and user-friendly, ultimately encouraging engagement and improvement in users' eating habits.

Low-Fidelity Prototypes: Initial prototypes focused solely on usability, omitting icons, images, and colors.

User Testing: Conducted several rounds of usability testing to gather feedback and make necessary adjustments.

UI Design: Finalized the visual design through quick iterations, ensuring a user-friendly interface that effectively incorporated the algorithm.

Through user-centered design, we developed a seamless experience. Gone are the days of tedious ingredient input; our app leverages image recognition technology to analyze your meal's composition. Beyond the initial estimate, we provide personalized suggestions for reducing your footprint in future meals. This empowers users to make informed dietary choices that contribute to a healthier planet, all while enjoying their food.
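One way such personalized suggestions could work is sketched below. This is a hypothetical heuristic, not the app's documented logic: it looks up a lower-emission alternative for each ingredient and recommends the single swap that saves the most, assuming the substitute replaces the original ingredient gram for gram. The substitution table, the placeholder factor values, and `suggest_swap` are illustrative assumptions.

```python
from typing import Optional, Tuple

# Placeholder emission factors in kg CO2e per kg (illustrative, not real data).
FACTORS = {"beef": 60.0, "lentils": 1.0, "cheese": 20.0, "tofu": 3.0, "rice": 4.0}

# Hypothetical substitution table: ingredient -> lower-emission alternative.
SWAPS = {"beef": "lentils", "cheese": "tofu"}


def suggest_swap(meal, factors, swaps) -> Optional[Tuple[str, str, float]]:
    """Return (ingredient, alternative, kg CO2e saved) for the single swap
    that saves the most, assuming a gram-for-gram replacement.
    Returns None if no ingredient in the meal has a listed alternative."""
    best = None
    for name, grams in meal.items():
        alternative = swaps.get(name)
        if alternative is None:
            continue  # no known substitute for this ingredient
        saved = (factors[name] - factors[alternative]) * grams / 1000.0
        if best is None or saved > best[2]:
            best = (name, alternative, saved)
    return best


meal = {"beef": 150, "rice": 200, "cheese": 30}  # grams per ingredient
print(suggest_swap(meal, FACTORS, SWAPS))
```

Suggesting only the highest-impact swap keeps the advice short and actionable; a production system would also need to account for nutrition and user preferences, which this sketch ignores.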